Deepfakes Decoded #5: Doctors, Deepfakes, and the Danger to Public Health

Examining the Invisible Threats that Jeopardize the Integrity of Healthcare Professionals and Public Health

Quick Update!

Over the course of the last four editions, we've examined the troubling implications of deepfakes across various sectors, from corporate brands to politics. Recognizing the urgent need for action, Paul Vann and I have founded IdentifAI to tackle these problems head-on. This robust deepfake protection technology aims to safeguard not just the integrity of individuals across these domains, but also the institutions they represent.

IdentifAI takes a novel approach to the challenge of deepfakes by emphasizing accurate image authentication over typical detection methods. Using proprietary contour algorithms and machine learning techniques, our platform offers a streamlined user interface for managing and monitoring image veracity in real time. We present a cost-effective and scalable solution, distinct from the high costs of traditional, AI-based detection models. We plan to focus on individuals at high risk, including musicians, celebrities, influencers, and politicians, along with established brands and social platforms.[1] IdentifAI preserves users’ digital image integrity with an unmatched level of confidence and assurance.

We would really appreciate readers signing up to follow along on our journey to help address this significant issue!

Deepfakes Decoded

Deepfakes Decoded is an ongoing series that delves into the multifaceted challenges of deepfake technology. Whether you've been with us from the very first post or are just now tuning in, we aim to bring you a nuanced understanding of the pressing challenges deepfakes can pose. They have the potential to significantly impact various aspects of society, including politics, entertainment, and more. In this fifth installment, we focus on another important persona highlighted at the start of this series: doctors and medical professionals. As with the influencers, politicians, and celebrities we've scrutinized in prior editions, these trusted figures are not exempt from the perils of deepfake manipulation. Such distortions could risk more than just their personal reputations; they could lead to misinformation among patients, disrupt established medical protocols, and even jeopardize public health. At a time when faith in medical expertise is more critical than ever, grasping the stakes of deepfakes in this sector is imperative.

Doctors, Deepfakes, and the Danger to Public Health

From the Operating Room to Your Feed

Let's start by emphasizing that deepfakes are not a threat inside the operating room, clinical consultations, or any other in-person medical service. The real peril emerges as medical professionals, researchers, and public health officials increasingly turn to social media platforms, including TikTok, to voice their opinions and share information. This shift has been notably fueled by global crises such as the COVID-19 pandemic. The risk, therefore, is not in the clinical work these professionals perform but in how deepfake technology can manipulate and distort their public, online personas.

Doctors as Digital Thought Leaders: A Double-Edged Sword

The visibility that comes with becoming an influencer on social media platforms like Twitter, LinkedIn, YouTube, and TikTok has a cost. Being in the spotlight makes doctors prime targets for deepfake attacks that can tarnish both their professional and personal lives. A manipulated piece of content could falsely show a doctor endorsing harmful products or pushing false medical information, eroding public trust not only in the individual but also in the medical community at large. This new role as a digital thought leader presents unique challenges, requiring heightened awareness and advanced technological solutions to protect against the malicious use of deepfake technology.

A Tarnished Image: The Exploitation of Doctor Personas

Imagine a scenario where the likenesses of well-respected doctors are deepfaked to endorse questionable health products online. These aren't just edited photos; these are deepfake videos where real doctors' faces are replaced with digitally created visages, all the while maintaining the illusion that these medical professionals are endorsing these products or services.

While these fabricated endorsements accumulate millions of views, the implications are disastrous for the medical community. Real physicians, whose images are appropriated for these deceptive practices, face an erosion of their personal and professional credibility. These individuals can be thrust into legal battles to reclaim their identity and are subject to undue scrutiny, both from the public and potentially from medical boards.

Moreover, this scheme serves to dilute the authority of doctors as credible sources of information. Even if these videos are debunked quickly, the impact on public opinion is long-lasting. It extends beyond the affected individuals, generating skepticism that ripples throughout the medical community, complicating public health campaigns and undermining patient-doctor relationships. This deceptive practice adds a new layer of complexity to the already challenging landscape that doctors navigate online. It calls for not only legal safeguards but also a renewed emphasis on digital literacy, both from the medical professionals and the public they serve.

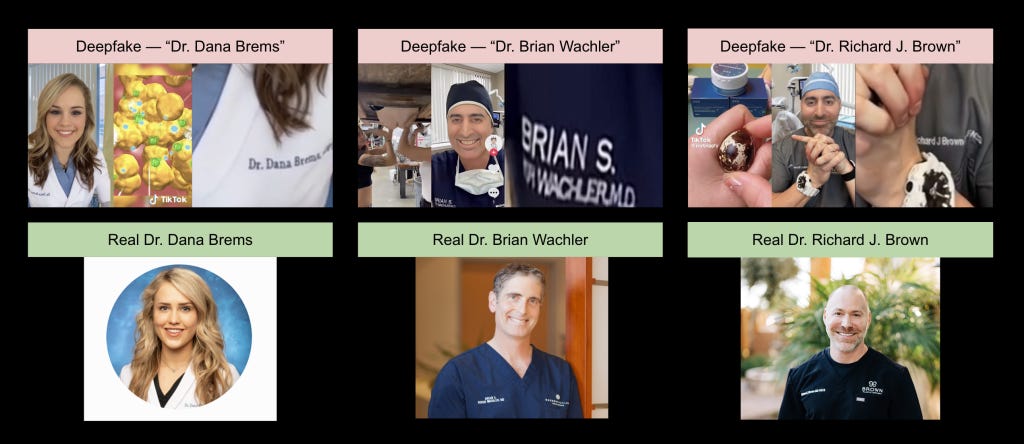

As a prime example of what can occur, Dr. Richard J. Brown, Dr. Dana Brems, and Dr. Brian Boxer Wachler have all fallen victim to deepfakes on TikTok. The content creators took original videos from these legitimate physicians and applied deepfake methods to alter their appearances. In one case (pictured), the counterfeit version of Dr. Brems appears to feature the face of popular singer Selena Gomez.[2]

Consequences and Ramifications

Public Mistrust

The circulation of falsified media can cultivate a climate of mistrust. This not only casts a shadow over the individual physician who was targeted but also raises general skepticism toward the broader medical community. This heightened doubt makes it increasingly challenging to promote critical health information, engage the public in medical initiatives, or effectively manage health crises.

The issue of eroding public trust in the medical community has been magnified by recent experiences. The COVID-19 pandemic served as a stark example, bringing forth a flood of misinformation and disinformation that pushed some segments of the population to increasingly doubt established scientific facts and expert guidance. This existing skepticism serves as a fertile ground for deepfake technology to further sow seeds of mistrust. The public may find it increasingly difficult to separate fact from fiction. This makes the challenge of disseminating accurate medical information even more daunting and raises the stakes for combating misinformation in times of crisis.

The Economic Ripple Effect

While the field of healthcare is essential and therefore somewhat insulated from drastic declines in public engagement, the erosion of trust due to deepfakes can have tangible effects. Clinics and hospitals where these targeted professionals practice may experience a subtle but impactful reduction in patient trust. This could manifest in various ways: a hesitancy to follow medical advice, a drop in elective procedures, or a reluctance to participate in preventative healthcare programs. While it's unlikely that there will be a significant dip in patient numbers given the indispensable nature of healthcare services, these smaller shifts can accumulate over time, affecting not only the quality of healthcare delivery but also the financial health of these institutions.

Fighting Back: The Imperative for Action

To combat this emerging menace, strong verification protocols for online medical information are essential. Medical associations might also consider formulating guidelines on social media usage for professionals to adhere to, ensuring that their online presence is less susceptible to malicious tampering.

Social media provides a platform for medical professionals to combat misinformation. Yet, the same platforms become battlegrounds where their reputation and credibility are at stake. Navigating this duality becomes an ever-present challenge in the modern era.

The Future Landscape: AI and Healthcare

As AI advances, its role in healthcare is celebrated for improving diagnostics and treatment plans. However, the darker side of AI, deepfakes, cannot be ignored; technologists and healthcare providers need to collaborate on solutions that protect against malicious AI applications. At the same time, generative artificial intelligence will also dramatically improve medicine. Radiology, protein synthesis, and pharmaceutical drug creation, among many others, are all areas that can and will be rapidly advanced in the next few years through generative models such as generative adversarial networks.

A study from Taipei Medical University[3][4] helps demonstrate how deepfake technology isn't always a force for harm. The researchers used a highly accurate facial emotion recognition system to create videos that altered the facial expressions of Parkinson’s disease patients. This tech aims to improve doctors' empathy and ability to read patient emotions, all while protecting patient privacy. Edward Yang, the study's first author, mentioned that their real-world video database originally aimed to show how facial emotion recognition could evaluate emotional changes between doctors and patients during clinical interactions.[5] Looking ahead, more research is needed to see how the system can objectively analyze doctors' reactions to patients' expressions, and vice versa. Overall, the technology showed over 80% accuracy in real-world tests, suggesting a promising avenue for positive applications of deepfake tech.

On the drug development front, the pharma and biotech industries have embraced the use of generative AI and deepfakes. Dr. Petrina Kamya, head of AI platforms at Insilico Medicine, notes that deepfake technology isn't directly involved in curing diseases. However, she emphasizes that "it can play an important role in generating data where it is lacking or in generating ideas in terms of novel chemistry."[6] The takeaway here is that, despite the ethical concerns surrounding deepfakes, this technology is double-edged. It has the capacity not just to deceive, but also to drive scientific innovation, offering new avenues for data creation and idea generation in critical sectors of society.

Final Thoughts: A Call for Caution

The digital and professional lives of medical professionals are merging in complex ways, compounded by the looming threat of deepfake technology. Guarding against this requires collective vigilance, proactive technological solutions, and perhaps, a revisiting of the ethical codes that guide not just medical practice but human interaction in a digital age.

Bonus Content:

I want to quickly touch on something outside of the focal points of this particular post. I hadn't intended to delve into the intricate global affairs currently unfolding, but a statement by David Friedberg on the latest All-In Podcast compellingly highlights the far-reaching consequences of online content, regardless of its factual accuracy. Although the quote has nothing to do with deepfakes, it drives home the fact that seeing something online can rapidly feed confirmation bias. Deepfakes pose a significant risk to the broader internet and its users because these images, videos, and sound bites are likely to reinforce the confirmation bias described in the quote below.

“The hospital bombing. I don’t know if it matters that we get the corrections from all these people that may have said something that turns out not to be true because it was almost like that media became confirmation bias for people that already felt that this is what was going on and this was simply evidence of what is going on and it justifies the next step. It justifies the beliefs. It justifies the morality, and if it wasn’t this it’s going to be something else…therefore anything [someone] sees is confirmation bias for [their] belief.”

- David Friedberg

Think a little deeper.

This is a Stream of Uncommonness.

Justin

[1] Focal points and target markets are subject to change.

[2] https://www.mediamatters.org/tiktok/deepfake-doctors-are-selling-sketchy-health-products-tiktok

[3] https://www.jmir.org/2022/3/e29506

[4] https://arxiv.org/pdf/2003.00813.pdf

[5] https://www.mddionline.com/artificial-intelligence/medical-deepfakes-are-real-deal

[6] https://www.siliconrepublic.com/innovation/deepfake-ai-healthcare-diseases-insilico-medicine-pharma